Note: the demos use two third-party libraries, FluentAssertions and xUnit.

We start with a DemoRepository whose AddProduct method we want to test. (The code is deliberately oversimplified for clarity.)

    public class DemoRepository
    {
        ...

        public void AddProduct(Guid id)
        {
            _dbContext.Products.Add(new Product { Id = id });
            _dbContext.SaveChanges();
        }
    }

Using Transaction Scopes

EF Core added support for [TransactionScope](https://docs.microsoft.com/en-us/ef/core/saving/transactions#using-systemtransactions) in version 2.1.

Isolating tests with a TransactionScope is simple: wrap the call to AddProduct in a TransactionScope, and all changes are reverted at the end of the test because the scope is never completed. There are a few preconditions, though. The method under test must not start transactions with BeginTransaction(); if it needs a transaction, it has to use a TransactionScope as well.

I also recommend reading my other blog post, Entity Framework Core: Use TransactionScope with Caution!

    public DemoRepositoryTests()
    {
        _dbContext = CreateDbContext();
        _repository = new DemoRepository(_dbContext);
    }

    [Fact]
    public void Should_add_new_product()
    {
        var productId = new Guid("DBD9439E-6FFD-4719-93C7-3F7FA64D2220");

        using (var scope = new TransactionScope())
        {
            _repository.AddProduct(productId);

            _dbContext.Products.FirstOrDefault(p => p.Id == productId).Should().NotBeNull();

            // the transaction is going to be rolled back because the scope is not completed
            // scope.Complete();
        }
    }
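
To see the rollback behaviour in isolation, without a database, here is a minimal sketch that uses only System.Transactions. The class TransactionScopeDemo and its Run method are hypothetical names introduced for this illustration. The sketch subscribes to the TransactionCompleted event of the ambient transaction and reports the final status once the scope has been disposed.

```csharp
using System;
using System.Transactions;

// Hypothetical helper for illustration only; not part of the repository or the tests above.
public static class TransactionScopeDemo
{
    // Opens a TransactionScope, optionally completes it,
    // and returns the status the transaction ended with.
    public static TransactionStatus Run(bool complete)
    {
        var status = TransactionStatus.Active;

        using (var scope = new TransactionScope())
        {
            // Transaction.Current is the ambient transaction created by the scope.
            Transaction.Current.TransactionCompleted +=
                (sender, e) => status = e.Transaction.TransactionInformation.Status;

            if (complete)
            {
                scope.Complete();
            }
        } // Dispose() commits only if Complete() was called; otherwise it rolls back.

        return status;
    }
}
```

With no resource enlisted, I would expect Run(false) to report Aborted and Run(true) to report Committed, which mirrors what happens to the inserted product in the test above.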

Using New Databases

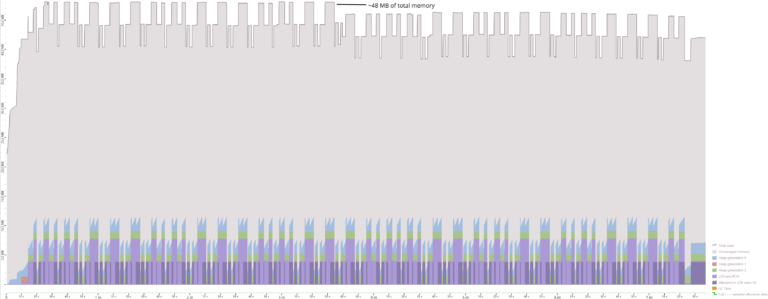

Creating a new database for each test is very easy, but it makes the tests slow. On my machine, creating and deleting a database on the fly adds about 10 seconds to each test.

Each test performs the following steps: generate a new database name, create the database by running the EF migrations, and delete the database at the end.

    public class DemoRepositoryTests : IDisposable
    {
        private readonly DemoDbContext _dbContext;
        private readonly DemoRepository _repository;
        private readonly string _databaseName;

        public DemoRepositoryTests()
        {
            _databaseName = Guid.NewGuid().ToString();

            var options = new DbContextOptionsBuilder<DemoDbContext>()
                .UseSqlServer($"Server=(local);Database={_databaseName};...")
                .Options;

            _dbContext = new DemoDbContext(options);
            _dbContext.Database.Migrate();

            _repository = new DemoRepository(_dbContext);
        }

        // Tests come here

        public void Dispose()
        {
            // EnsureDeleted() drops the database. A plain DROP DATABASE sent over this
            // context's connection would fail, because SQL Server refuses to drop the
            // database the current session is connected to.
            _dbContext.Database.EnsureDeleted();
            _dbContext.Dispose();
        }
    }
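
A small naming helper can make databases left behind by aborted test runs easy to spot and clean up. The sketch below is hypothetical: the class TestDatabaseNames, the prefix and both methods are my own assumptions and not part of the code above. It prefixes the generated GUID and escapes a name so that it can be placed safely in T-SQL.

```csharp
using System;

// Hypothetical helper for illustration only.
public static class TestDatabaseNames
{
    // Assumed prefix; pick whatever makes test databases recognizable on your server.
    public const string Prefix = "IntegrationTest_";

    // Creates a unique name, e.g. "IntegrationTest_" followed by 32 hex digits.
    public static string Create()
    {
        return Prefix + Guid.NewGuid().ToString("N");
    }

    // Wraps an identifier in square brackets for T-SQL and doubles any
    // closing bracket inside it, so the name cannot break out of the brackets.
    public static string Quote(string identifier)
    {
        if (identifier == null) throw new ArgumentNullException(nameof(identifier));
        return "[" + identifier.Replace("]", "]]") + "]";
    }
}
```

The same quoting applies to any identifier that is concatenated into raw SQL, not just database names.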

Using Different Database Schemas

The third option is to use the same database but a different schema for each test. Creating a new schema and running the EF migrations usually takes less than 50 ms, which is perfectly acceptable for an integration test. The prerequisites for running queries against different schemas are schema-aware DbContext instances and schema-aware EF migrations. For more information on changing the database schema at runtime, read my blog posts:

- Entity Framework Core: Changing Database Schema at Runtime

- Entity Framework Core: Changing DB Migration Schema at Runtime
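
The test base class in this section passes an IDbContextSchema to the DbContext. The real definitions live in the posts listed above and are not repeated here. The following is only a minimal sketch of what such an abstraction might look like, assuming it needs to carry nothing but the schema name.

```csharp
using System;

// Assumed shape of the schema abstraction; see the linked posts for the actual definitions.
public interface IDbContextSchema
{
    string Schema { get; }
}

public sealed class DbContextSchema : IDbContextSchema
{
    public string Schema { get; }

    public DbContextSchema(string schema)
    {
        // A null schema would silently fall back to the default schema, so reject it early.
        Schema = schema ?? throw new ArgumentNullException(nameof(schema));
    }
}
```

A schema-aware DbContext would take an IDbContextSchema in its constructor and use its Schema property when building the model.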

The class that executes the integration tests consists of two parts: the tables are created in the constructor and deleted again in Dispose().

I use a generic base class so that the same logic can be reused for different DbContext types.

In the constructor we generate the schema name with Guid.NewGuid(), create the DbContextOptions using the DbSchemaAwareMigrationAssembly and DbSchemaAwareModelCacheKeyFactory described in my previous posts, create the DbContext, and run the EF migrations. The database is then fully prepared for the tests. After the tests have run, Dispose() rolls back the EF migrations with IMigrator.Migrate("0"), drops the EF history table __EFMigrationsHistory, and finally drops the newly generated schema.

    public abstract class IntegrationTestsBase<T> : IDisposable
        where T : DbContext
    {
        private readonly string _schema;
        private readonly string _historyTableName;
        private readonly DbContextOptions<T> _options;

        protected T DbContext { get; }

        protected IntegrationTestsBase()
        {
            _schema = Guid.NewGuid().ToString("N");
            _historyTableName = "__EFMigrationsHistory";

            _options = CreateOptions();

            DbContext = CreateContext();
            DbContext.Database.Migrate();
        }

        protected abstract T CreateContext(DbContextOptions<T> options,
                                           IDbContextSchema schema);

        protected T CreateContext()
        {
            return CreateContext(_options, new DbContextSchema(_schema));
        }

        private DbContextOptions<T> CreateOptions()
        {
            return new DbContextOptionsBuilder<T>()
                .UseSqlServer($"Server=(local);Database=Demo;...",
                              builder => builder.MigrationsHistoryTable(_historyTableName, _schema))
                .ReplaceService<IMigrationsAssembly, DbSchemaAwareMigrationAssembly>()
                .ReplaceService<IModelCacheKeyFactory, DbSchemaAwareModelCacheKeyFactory>()
                .Options;
        }

        public void Dispose()
        {
            DbContext.GetService<IMigrator>().Migrate("0");

            DbContext.Database.ExecuteSqlCommand(
                (string)$"DROP TABLE [{_schema}].[{_historyTableName}]");
            DbContext.Database.ExecuteSqlCommand((string)$"DROP SCHEMA [{_schema}]");

            DbContext?.Dispose();
        }
    }

The base class is used as follows:

    public class DemoRepositoryTests : IntegrationTestsBase<DemoDbContext>
    {
        private readonly DemoRepository _repository;

        public DemoRepositoryTests()
        {
            _repository = new DemoRepository(DbContext);
        }

        protected override DemoDbContext CreateContext(DbContextOptions<DemoDbContext> options,
                                                       IDbContextSchema schema)
        {
            return new DemoDbContext(options, schema);
        }

        [Fact]
        public void Should_add_new_product()
        {
            var productId = new Guid("DBD9439E-6FFD-4719-93C7-3F7FA64D2220");

            _repository.AddProduct(productId);

            DbContext.Products.FirstOrDefault(p => p.Id == productId).Should().NotBeNull();
        }
    }

Happy testing!