The source code for this post can be found here:

https://github.com/thinktecture/feO2x-publishing/tree/main/2023-11-03_data-access-in-native-aot

Introduction

Many .NET projects use Object/Relational Mappers (ORMs) to access databases, the two most famous ones probably being Entity Framework Core (EF Core) and Dapper. Some of them help you with writing SQL Queries in LINQ which adds compile time safety through a statically typed C# model, and all of them help with serialization/materialization of parameters and query response records. EF Core even brings tools with it to manage and execute migrations.

However, these ORMs cannot be used in combination with Native AOT currently (Dapper actually released its first version supporting Native AOT, but not all features are supported yet). Most of them rely on unbound reflection internally which cannot be used in .NET apps that are trimmed and converted to binary code during publishing. But what if you want to benefit from the advantages Native AOT brings to the table and access databases? How do you structure your data access layer when you have to rely on plain ADO.NET with its connections, transactions, commands, and data readers? How do you ensure that your data access code is not convoluted with your business logic? In this post, we will implement a small CRUD Web API with ASP.NET Core and I show you how you can apply the Humble Object pattern to solve these questions so that it almost feels like you are using an ORM. We will implement so-called sessions which encapsulate Npgsql as a plain ADO.NET provider for PostgreSQL.

Gathering Requirements

Let us start with a Minimal API endpoint where we can analyze what we typically do when interacting with an ORM. For this example, I will use EF Core because of its popularity.

app.MapPut(

"/api/contacts",

// The DbContext is passed to the endpoint via Dependency Injection.

async (AppDbContext dbContext, IValidator<UpdateContactDto> validator, UpdateContactDto dto) =>

{

var validationResult = validator.Validate(dto);

if (!validationResult.IsValid)

return Results.BadRequest(validationResult.ToDictionary());

// Here we make a query against the database without explicitly opening the connection

// to the database or starting a transaction.

var existingContact = await dbContext.Contacts.FindAsync(dto.Id);

if (existingContact is null)

return Results.NotFound();

// Here we simply update properties of an in-memory object. The call to SaveChangesAsync

// uses EF Core's Change Tracking feature to generate the UPDATE statement and

// exectute it. All SQL statements will be wrapped in a transaction implicitly.

existingContact.Name = dto.Name;

existingContact.Email = dto.Email;

existingContact.PhoneNumber = dto.PhoneNumber;

await dbContext.SaveChangesAsync();

// Before exiting the endpoint, we do not dispose of the dbContext. This is done

// automatically via the DI container scope of the HTTP request.

return Results.NoContent();

}

);

In the code snippet above, I selected a simplified CRUD Update scenario. We can see an HTTP endpoint that can be targeted via the PUT method – we need to pass in a JSON body that resembles the UpdateContactDto. From the DI container, a validator and, more importantly for our topic at hand, the DB context is injected. After validating the DTO, we simply call dbContext.Contacts.FindAsync to check if the contact to be updated actually exists. If not, we return Results.NotFound, but in case we do, we simply update properties on the entity and then call dbContext.SaveChangesAsync. EF Core uses its Change Tracking feature to determine the properties that were changed, creates a corresponding update statement and executes it against the database. All this is done in an implicit transaction that is active throughout the call to SaveChangesAsync.

And exactly this Change Tracking is the feature that makes EF Core a “full ORM”. Dapper or LinqToDB for example are so called “Micro ORMs”. These types of ORMs do not have a SaveChanges method which will figure out the insert, update, and delete statements – you need to create corresponding DB commands explicitly. There are other features that differ from ORM to ORM, for example Migrations that come with EF Core, global filters, working with or without entities when constructing queries, and many more, but Change Tracking is the central feature that we presume when we talk about a “full ORM”. This is also one of the key features of a Unit of Work according to its description in Patterns of Enterprise Application Architecture (PEAA) by Martin Fowler et al.: it handles (usually one, but sometimes several) transactions, tracks changes, and deals with concurrency issues, so the calling code does not need to deal with it.

We will not implement a real Change Tracker in this blog post – its an advanced topic and comes with its own overhead. Furthermore, we need to address some other things that happen implicitly when we use an ORM:

- The lifetime of the data access object is not handled by the endpoint, but rather by the DI container. For each HTTP request, a DI container scope is created and only one instance of the DB context is created within it. When the response is sent, the scope ends and automatically disposes of the DB context. Our implementation should be manageable by the DI container and should also work with factories where the lifetime is managed by the caller.

- We never need to open a connection to the database or start and handle a transaction explicitly. This is all done by the DB context internally – our implementation should do the same. The connection and transaction should be cleaned up when Dispose(Async) is called.

app.MapPut(

"/api/contacts",

// We pass our IUpdateContactSession to the endpoint via Dependency Injection and let the

// lifetime be handled by the DI container

async (IUpdateContactSession session, IValidator<UpdateContactDto> validator, UpdateContactDto dto) =>

{

var validationResult = validator.Validate(dto);

if (!validationResult.IsValid)

return Results.BadRequest(validationResult.ToDictionary());

// The GetContactAsync method will execute a command to retrieve the contact for us.

// Internally, we will also open the connection here and start a transaction.

// This way, we do not allocate native resources when DTO validation fails.

var existingContact = await session.GetContactAsync(dto.Id);

if (existingContact is null)

return Results.NotFound();

existingContact.Name = dto.Name;

existingContact.Email = dto.Email;

existingContact.PhoneNumber = dto.PhoneNumber;

// Here we implicitly perform an UPDATE command. The subsequent call to

// SaveChangesAsync will commit the internal transaction.

await session.UpdateContactAsync(existingContact);

await session.SaveChangesAsync();

return Results.NoContent();

}

);

Looks pretty similar to the EF Core example to me, except for the explicit UpdateContactAsync call. Let us start implementing our session now.

Implementing Base Functionality

First, we kick off the session implementation with some interfaces:

// IReadOnlySession represents the abstraction of a connection to a third-party system which is

// only used to read data. We will never commit a transaction when using it. We support

// IDisposable and IAsyncDisposable for DI container integration.

public interface IReadOnlySession : IDisposable, IAsyncDisposable { }

// The ISession interface represents a connection which involves a transaction that will be

// committed when calling SaveChangesAsync. Not comitting and disposing the session

// will implicitly roll back the transaction.

public interface ISession : IReadOnlySession

{

Task SaveChangesAsync(CancellationToken cancellationToken = default);

}

I introduced two interfaces that will be used in different scenarios: the IReadOnlySession is used as the base interface for sessions that only queries data. It derives from IDisposable and IAsyncDisposable so that the session can be disposed of by the DI container. ISession in turn has the same API (by deriving from IReadOnlySession) and an additional SaveChangesAsync method which will commit the internal transaction – thus, this type of session should be used in circumstances where we manipulate data. In the end, this design follows the first rule of the Dependency Inversion Principle, stating that clients should only find the members on an abstraction that they actually require – and you will probably never call SaveChangesAsync in an HTTP GET endpoint. Some might also say that this design follows Command-Query Separation (CQS).

When accessing databases with ADO.NET, the main thing that we will need to deal with are commands. Commands will be created, statements and parameters attached to them, and then executed against the database, no matter if we invoke SQL/DML/DDL directly or call into stored procedures/functions. We might execute commands as queries so that a DB data reader is returned, or we can execute them in a way that only returns a scalar value (number of affected rows or the single value of a query).

This means we need to deal with a lot of commands throughout our sessions, and we can use the creation of a command instance as the central point to initialize the database connection and a transaction if necessary. When we follow this pattern, we will implement several sessions throughout the different use cases/features in our code base, so an easy way to share the state for the connection and transaction is the use of a shared base class. Let us write that now:

public abstract class ReadOnlyNpgsqlSession(

NpgsqlConnection connection,

IsolationLevel? transactionLevel = null

) : IReadOnlySession

{

protected NpgsqlConnection Connection { get; } = connection;

protected IsolationLevel? TransactionLevel { get; } = transactionLevel;

protected NpgsqlTransaction? Transaction { get; private set; }

protected bool IsInitialized

{

get

{

if (transactionLevel.HasValue && Transaction is null)

return false;

return connection.State is ConnectionState.Open;

}

}

public virtual async ValueTask DisposeAsync()

{

if (Transaction is not null)

await Transaction.DisposeAsync();

await connection.DisposeAsync();

}

public virtual void Dispose()

{

Transaction?.Dispose();

connection.Dispose();

}

public ValueTask<NpgsqlCommand> CreateCommandAsync(

string? sql = null,

CancellationToken cancellationToken = default

)

{

return IsInitialized ?

new ValueTask<NpgsqlCommand>(CreateCommand(sql)) :

InitializeAndCreateCommandAsync(sql, cancellationToken);

}

private async ValueTask<NpgsqlCommand> InitializeAndCreateCommandAsync(

string? sql,

CancellationToken cancellationToken

)

{

await InitializeAsync(cancellationToken);

return CreateCommand(sql);

}

protected async Task InitializeAsync(CancellationToken cancellationToken)

{

if (connection.State != ConnectionState.Open)

await connection.OpenAsync(cancellationToken);

if (transactionLevel.HasValue && Transaction is null)

Transaction = await connection.BeginTransactionAsync(cancellationToken);

}

private NpgsqlCommand CreateCommand(string? sql)

{

var command = connection.CreateCommand();

command.Transaction = Transaction;

if (sql is not null)

{

command.CommandText = sql;

command.CommandType = CommandType.Text;

}

return command;

}

}

The class ReadOnlyNpgsqlSession above handles the Postgres connection and an optional transaction. You might be asking yourself why we handle a transaction in this base class when I previously stated that read-only sessions do not require one. The thing is that in some circumstances, you might want to start a transaction even in read-only scenarios, for example in the rare circumstance when you need to read uncommitted data. You can only achieve that by explicitly starting a transaction with IsolationLevel.ReadUncommited (even if you will never commit this transaction). For whom is wondering: in every database system that I worked with, a transaction is always involved when a command is executed. If you do not create a dedicated transaction, usually a transaction per executed command will be created implicitly – please be aware of that since this is most likely not what you want when you execute several commands consecutively.

However, by default, the transaction will not be created unless you pass a value other than null to the transactionLevel parameter of the constructor. By the way, you can see here a brand-new feature of C# 12: primary constructors. Instead of defining a constructor explicitly, we simply open parentheses after the class name and specify the parameters of the primary constructor. The C# compiler will then automatically create backing fields for these parameters and you can access them throughout the instance members in your class scope.

Next to the transactionLevel parameter, there is the actual NpgsqlConnection which we will open and optionally create a transaction from. The important method here is the CreateCommandAsync method: we use it to initialize a new command from the connection and optionally open it and create the transaction. Subsequent calls to CreateCommandAsync will simply initialize a new command (actually, the Postgres connection will try to cache the command and reuse it). When a command is initialized, the transaction will be attached to it if required.

As the connection and transaction is handled in this base class, the subclass implementing ISession is pretty simple:

public abstract class NpgsqlSession(

NpgsqlConnection connection,

IsolationLevel transactionLevel = IsolationLevel.ReadCommitted

) : ReadOnlyNpgsqlSession(connection, transactionLevel), ISession

{

public virtual async Task SaveChangesAsync(CancellationToken cancellationToken = default)

{

// We know it is not possible that Transaction is null after passing a

// non-null transactionLevel to the base class constructor.

if (Transaction is not null)

await Transaction.CommitAsync(cancellationToken);

else

throw new InvalidOperationException("The transaction was not initialized beforehand");

}

}

The NpgsqlSession simply derives from our previous ReadOnlyNpgsqlSession. In the constructor, the default transaction level is changed to IsolationLevel.ReadCommitted, and there is the SaveChangesAsync method which simply commits the Transaction from the base class. If this method is not called, for example because an exception was thrown beforehand, then the transaction will be rolled back implicitly, which is an implementation detail of the Npgsql package.

With these two interfaces and two base classes in place, we can now go over to the implementation of the session of our Update-Contact endpoint.

Implementing Sessions for Endpoints

When we reuse the aforementioned base classes, our IUpdateContactSession interface and its implementation for Postgres looks like this:

// Our IUpdateContactSession derives from ISession because we want to manipulate data

// and therefore need to commit the internal transaction.

public interface IUpdateContactSession : ISession

{

Task<Contact?> GetContactAsync(Guid contactId);

Task UpdateContactAsync(Contact contact);

}

// The implementation derives from NpgsqlSession and only needs to deal with the

// use-case-specific members of the interface.

public sealed class NpgsqlUpdateContactSession(NpgsqlConnection connection)

: NpgsqlSession(connection), IUpdateContactSession

{

public async Task<Contact?> GetContactAsync(Guid contactId)

{

const string sql =

"""

SELECT "Id", "Name", "Email", "PhoneNumber"

FROM "Contacts"

WHERE "Id" = $1;

""";

await using var command = await CreateCommandAsync(sql);

command.Parameters.Add(new NpgsqlParameter<Guid> { TypedValue = contactId });

await using var reader = command.ExecuteReaderAsync();

return await DeserializeContactAsync(reader);

}

public async Task UpdateContactAsync(Contact contact)

{

const string sql =

"""

UPDATE "Contacts"

SET "Name" = $1, "Email" = $2, "PhoneNumber" = $3

WHERE "Id" = $4;

""";

await using var command = await CreateCommandAsync(sql);

command.Parameters.Add(new NpgsqlParameter { Value = contact.Name });

command.Parameters.Add(new NpgsqlParameter { Value = contact.Email });

command.Parameters.Add(new NpgsqlParameter { Value = contact.PhoneNumber });

command.Parameters.Add(new NpgsqlParameter<Guid> { TypedValue = contact.Id });

await command.ExecuteNonQueryAsync();

}

private static async Task<Contact?> DeserializeContactAsync(NpgsqlDataReader reader)

{

if (!(await reader.ReadAsync()))

return null;

// We access the columns of a record by their ordinal number (index):

// 0 = Id, 1 = Name, etc.

// See the SQL statement above for the names of the columns

var id = reader.GetGuid(0);

var name = reader.GetString(1);

var email = reader.GetString(2);

var phoneNumber = reader.GetString(3);

return new Contact { Id = id, Name = name, Email = email, PhoneNumber = phoneNumber };

}

}

The IUpdateContactSession interface derives from ISession to inherit the Disposable and SaveChangesAsync members. It adds the two members that we require for our endpoint, GetUserAsync and UpdateUserAsync.

The NpgsqlUpdateContactSession then implements this interface and derives from NpgsqlSession to inherit all the implementations for IDisposable, IAsyncDisposable, and ISession. It effectively only needs to implement the commands for GetUserAsync and UpdateUserAsync. Each of these methods uses the CreateCommandAsync method of the base class to obtain a command instance – remember that this call will open up the internal connection and transaction if necessary. Parameters are then added to the commands to avoid SQL injection. After command execution, the returned data from the query is deserialized if necessary.

As you can see, the implementation takes roughly 50 lines of code – while working with an ORM is probably easier, the result is still good in terms of readability and maintainability. We also have avoided Spaghetti Code – our business logic in the Minimal API endpoint is loosely coupled with the data access logic via the use of interfaces. To get everything working, we simply need to register our session with the DI Container:

var connectionString = webApplicationBuilder.Configuration.GetConnectionString("Default");

if (string.IsNullOrWhiteSpace(connectionString))

throw new InvalidDataException("Could not find default connection string in app settings");

webApplicationBuilder

.Services

.AddSingleton<NpgsqlDataSource>(sp =>

new NpgsqlDataSourceBuilder(connectionString)

.UseLoggerFactory(sp.GetRequiredService<ILoggerFactory>())

.Build())

.AddScoped(sp => sp.GetRequiredService<NpgsqlDataSource>().CreateConnection())

.AddScoped<IUpdateContactSession, NpgsqlUpdateContactSession>();

Here, we register an NpgsqlDataSource, the default object in Npgsql for handling connections and commands since version 7, as a singleton to the DI container. The connection as well as our session is then registered with a scoped lifetime – the former is created from the aforementioned data source and injected into our session.

You want to implement another endpoint? Just create a new session interface for it, derive either from IReadOnlySession if you only read data, or from ISession if you want to manipulate data. Add methods to your session (typically one for each call to the third-party system, sometimes I also add methods for registering/unregistering entities if my session implementations can access a change tracker). Then you can implement it using the data access framework of your project. This provides a nice, easy to understand abstraction between your business logic and your data access code that ensures the former can be unit-tested in-memory without requiring a database or special features like EF Cores In-Memory provider (which is of limited use since it has no support for transactions amongst other shortcomings).

Classification of Sessions

The initial idea for sessions came to me when I tried to unit test code which included calls to databases (or other third-party systems). To solve the issue, I followed the Humble Object pattern as defined in xUnit Test Patterns by Gerard Meszaros. It simply states: “how can we make code testable when it is too closely coupled to its environment? We extract the logic into a separate easy-to-test -component that is decoupled from its environment.” What you end up with is also described in the pattern: “As a result, the Humble Object component becomes a very thin adapter layer that contains very little code.” And: “The Humble Object code is typically so simple that we often don’t bother writing tests for it because it can be quite difficult to set up the environment need to run it.”

And this is exactly what our sessions do: the data access code is moved into a separate, loosely coupled class which we can replace with a Test Double in our unit tests. This frees us from setting up and managing a database in our automated tests. No matter if you write your own test doubles or use NSubstitute or Moq: the fact that you will not cross the process boundary speeds up our test suite. And the 50 lines of code of our session are pretty easy to understand so that we can verify it by using a code review.

You might be asking if our session is a Unit of Work? My answer to this is “it depends”: in the example above, it is not, because we do not perform any change tracking, which is one of the core features a Unit of Work needs to support according to its description in PEAA. However, sessions are not strictly tied to using (PostgreSQL) ADO.NET – you can use any data access technology that you like, for example Entity Framework Core. You would structure the use-case-specific methods on your interface differently (e.g. for registering/unregistering entities instead of executing an INSERT or DELETE command), but this session would work exactly the same way in that it isolates the business logic from the concrete data access technology – and it would involve Change Tracking in this case.

But Kenny, is a session the same thing as the Repository pattern? No, it is not. Edward Hieatt and Rob Mee in PEAA as well as Eric Evans in Domain-Driven Design specify that a repository is an object that encompasses one type of entities, looks like a collection to its callers, and has the ability to query, materialize, and save said entities. EF Core’s DbSet<T> or LinqToDB’s ITable<T> is an implementation of the repository pattern – but this does not fit our sessions at all. First of all, we do not encompass only a single entity (in our example above, we only deal with contacts, but this is just for the sake of simplicity – when implementing a session, you can access any table that you need for your current use case/feature). Secondly, our session does not behave like a collection. Thirdly, while it offers the ability to query elements (a contact by its ID in our example), the caller is not able to query arbitrarily like you can with the IQueryable<T> extension methods on a DbSet<T> or ITable<T>. The actual query is hidden from the caller.

In conclusion: a session is not a Repository, maybe a Unit of Work, but definitely a Humble Object.

General Guidelines for Sessions

While we have implemented a session and classified it in the broader context of data access patterns, there are some guidelines I like to follow in my projects.

First of all, I like to have a single session per third-party system and per endpoint. The session then encapsulates all interactions with one third-party system. While it is technically possible with our implementation to have several session instances from different classes that share the same connection, it usually leads to more convoluted code in my opinion – I usually prefer to have all database interactions for a single feature listed in one place.

We also did not touch on the topic of Multi-Threading in the context of sessions so far. In general, this is not necessary because sessions are usually used in a sequential manner. However, in case you have a complex algorithm that involves concurrent database I/O, I would argue to instantiate the same session once per task or thread. The underlying connection pooling that is implemented on the ADO.NET provider level is the easiest way to synchronize access to a connection and its commands. In conclusion, you should keep thread synchronization out of your session implementations unless you absolutely have to.

Furthermore, we implemented our business logic directly in the Minimal API endpoint. You might want to place this logic in services or in Mediator handlers – but this does not really change the use of sessions. Instead of injecting a session into the endpoint, you can simply inject it in other places. If your endpoint is complicated and you decide to split the business logic across several services, you might want to create different interfaces for the different services (following the Dependency Inversion Principle), but then implement all in one session class (following the Interface Segregation Principle).

But Kenny, what if I have the same query in different sessions? You just told me that I should have a single session per endpoint/feature, how do I share code between them? An example for this would be a GetContactAsync method which retrieves a contact by ID – we can easily imagine that this query is executed in different endpoints. The answer is to place a shared implementation in a static method and forward calls to it in each session implementation. This way, you get the benefit of having all I/O interactions for a complex endpoint listed in its implementation while avoiding duplicate code.

If you share code between session: do not forget to switch to custom implementations when the requirements of the different session callers begin to differ over time. When you have several related problems, keep the option in mind that you can write several methods where each of them solves one of them, instead of packing everything into a single method which becomes hard to read because of the number of parameters, its cyclomatic complexity, etc. Of course, this highly depends on the context, but it is a thing developers tend to forget: generalizing code into an abstraction is easy, but more often then not, and I include myself here, too, we tend to forget that a reused abstraction can be dissolved into several methods, objects, etc. when the requirements of the callers differ over time. Instead we build more complexity into the same abstraction that becomes harder to maintain. Dan Abramov even gave a whole conference talk on the topic.

More Features in the Sample Code

If you take a look at the accompanying code, you can find some more advanced features. Let us quickly discuss how you can effectively navigate the code.

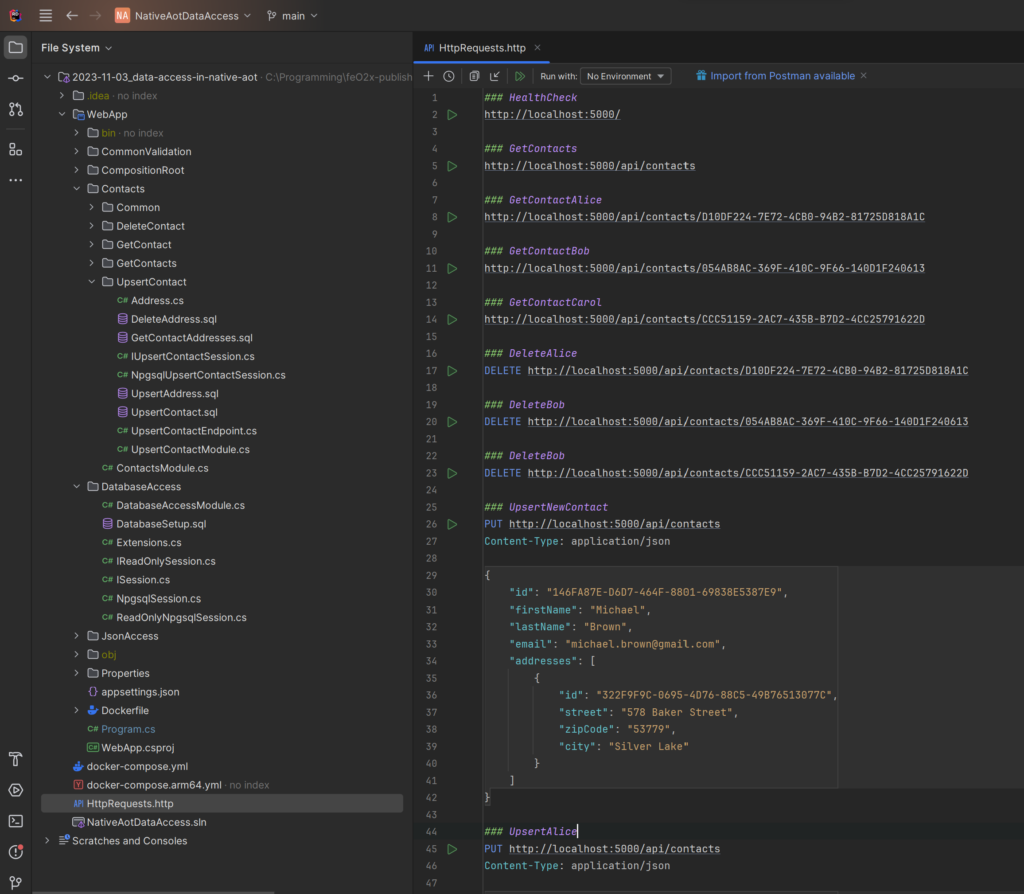

When you open up the solution file NativeAotDataAccess.sln, you will find a single ASP.NET Core Web API project called WebApp. This project is organized in vertical slices – the most interesting part for you are the DatabaseAccess folder (containing the base classes for sessions and general setup code for the data access layer) as well as Contacts and all its subfolders where different sessions are implemented for the endpoints. If you want to debug locally, you should set up a Postgres database and add the corresponding connection string to appsettings.Development.json (which is automatically ignored by git). The easier thing to do is just call docker compose up -d in the solution folder when you run on an x64 device, or docker compose -f docker-compose.yml -f docker-compose.arm64.yml up -d when you run on an ARM64-based device. The WebApp uses a Dockerfile similar to the one described in my previous article. Afterwards, you can use the HttpRequests.http file to execute requests against the different endpoints.

One of the advanced features in the sample is the use of Npgsql’s ability for batching. The great benefit of the Change Tracking mechanism of a full ORM is that you can easily perform changes in memory by setting properties, and the ORM will only create a single command with several INSERT, UPDATE, and DELETE statements, which of course reduces the number of round trips required to the database. A Micro ORM or plain ADO.NET tends to be more chatty as each command execution will result in a round trip. However, Npgsql actually has a mechanism to prevent that: NpgsqlBatch. Instances of this class allow you to track several NpgsqlBatchCommand objects which will be executed only when you call ExecuteNonQueryAsync/ExecuteReaderAsync on the batch. In the sample code, go to the WebApp/Contacts/UpsertContact folder to find the UpsertContactEndpoint which in turn uses the NpgsqlUpsertContactSession – this shows you how to batch commands and only execute them once SaveChangesAsync is called. Although DbBatch has been generally available since .NET 6 in ADO.NET, only few providers have implemented this functionality, namely Npgsql and MySqlConnector. Microsoft SQL Client’s implementation for batching is lagging behind.

Furthermore, you can see several other pieces of code like Source Generators for System.Text.Json in the JsonAccess folder as well as the use of FluentValidation and Polly.Core – these are not strictly related to Sessions, but you can see how they are used in the context of a Native AOT app. You can also see that the SQL queries are no longer directly embedded into the corresponding session C# files. Instead, they are placed into actual .sql files which makes it easier to directly execute them. They are processed as embedded resources by the build engine and retrieved via the small library Light.EmbeddedResources I wrote a few years back.

Conclusion

We started out with the with the need for a data access pattern providing a similar developer experience as full ORMs like Entity Framework Core do. We followed the Humble Object Pattern to create the concept of a session which performs some of the DbContext tasks (transaction management, disposing native resources) and put the corresponding functionality in two base classes. When we need to access data on a specific endpoint, service, or mediator handler, we can create an interface deriving from IReadOnlySession or ISession, specify all the interactions with the third-party system, and then implement this interface in a class which actually interacts with plain ADO.NET. Our business logic is clearly separated from the data access logic.

However, you might have noticed that these sessions are not strictly tied to using ADO.NET. You can use any data access technology to implement sessions, making them a versatile tool for loose coupling between your core logic and your data access layer. Together with concepts like Vertical Slices, it helps you organize the files in your projects in a consisting, scaling manner.