The source code for this post can be found here.

Introduction

The .NET 8 release is around the corner and will bring an important new feature to the table for ASP.NET Core: Native Ahead-Of-Time compilation, or Native AOT for short. Usually, when you build and deploy your ASP.NET Core app, your C# code is first compiled by Roslyn (the C# compiler) to Microsoft Intermediate Language (MSIL). At runtime, the Just-In-Time compiler (JIT) of the Common Language Runtime (CLR) then compiles MSIL to native code for the platform it is running on (following the famous “Compile once, run everywhere” principle). Native AOT compiles your code directly to native code for a selected target platform, so no JIT is involved at runtime anymore. Microsoft promises a smaller application size on disk, faster startup times, and lower memory consumption during execution.

In this post, we will explore the benefits and drawbacks of Native AOT from a general perspective, so let us dive into it.

Let's go native

To start off, I created an empty .NET 8 ASP.NET Core web app project and activated Native AOT by setting the PublishAot MSBuild property in the csproj file. I also disabled <ImplicitUsings> so that you can see the using directives the code actually needs. I also enabled <InvariantGlobalization> mode to remove culture-specific overhead and simplify deployment; you can read more about it here.

When you run your app now, e.g. from your IDE or with dotnet run, you will not notice any difference: Native AOT only compiles to native code when you publish your app. In this introductory post, I want to take some measurements to compare Native AOT deployments with regular JIT-based CLR deployments. For that, I add an IS_NATIVE_AOT compilation symbol to the csproj file whenever PublishAot is enabled, and I slightly modify Program.cs:

```xml
<Project Sdk="Microsoft.NET.Sdk.Web">

  <PropertyGroup>
    <TargetFramework>net8.0</TargetFramework>
    <Nullable>enable</Nullable>
    <PublishAot>true</PublishAot>
    <InvariantGlobalization>true</InvariantGlobalization>
    <DefineConstants Condition="'$(PublishAot)' == 'true'">$(DefineConstants);IS_NATIVE_AOT</DefineConstants>
  </PropertyGroup>

</Project>
```

```csharp
using System;
using System.Diagnostics;
using Microsoft.AspNetCore.Builder;

long initialTimestamp = Stopwatch.GetTimestamp();

var builder =
#if IS_NATIVE_AOT
    WebApplication.CreateSlimBuilder(args);
#else
    WebApplication.CreateBuilder(args);
#endif

var app = builder.Build();
app.MapGet("/", () => "Hello World!");

await app.StartAsync();

TimeSpan elapsedStartupTime = Stopwatch.GetElapsedTime(initialTimestamp);
Console.WriteLine($"Startup took {elapsedStartupTime.TotalMilliseconds:N3}ms");

double workingSet = Process.GetCurrentProcess().WorkingSet64;
Console.WriteLine($"Working Set: {workingSet / (1024 * 1024):N2}MB");

await app.StopAsync();
```

The first thing to note is the use of WebApplication.CreateSlimBuilder instead of WebApplication.CreateBuilder in Native AOT mode. It registers fewer ASP.NET Core features by default, which decreases application size and memory usage. I inserted calls to Stopwatch.GetTimestamp and Stopwatch.GetElapsedTime to measure the startup time of the web server. Please note that I also replaced the call to app.Run with app.StartAsync. Instead of blocking the executing thread until the web app shuts down, awaiting StartAsync only waits until the HTTP server has started successfully. Additionally, we read the WorkingSet64 value of our process and print the memory in use in megabytes. After measuring, we shut down the web app gracefully with app.StopAsync.

Let us now publish the app twice, once with Native AOT enabled and once in regular CLR mode (run these commands in a terminal in the folder that contains your csproj file):

```bash
# If you follow along, please execute the following statements in the same "WebApp" folder

# This call will have PublishAot set to true because we defined it previously in the csproj file
dotnet publish -c Release -o ./bin/native-aot

# For this call, we will explicitly set PublishAot to false via cmd arguments
dotnet publish -c Release -o ./bin/regular-clr /p:PublishAot=false
```

You will notice that the first command takes considerably longer than the second one because it generates native code. Look at the contents of the ./bin/native-aot subfolder. It contains only a single executable next to the debugging symbols (.dbg on Linux, .pdb on Windows) and the appsettings.json file. All DLLs have been merged into the executable because Native AOT implies a self-contained, single-file deployment with trimming. Compare that to the regular-clr subfolder, which contains WebApp.dll and its .deps.json and .runtimeconfig.json files next to a much smaller executable.

```text
# On Ubuntu 22.04:
bin
├─ native-aot
│  ├─ WebApp # Executable, 9.79MB
│  ├─ WebApp.dbg # debugging symbols, 33.02MB
│  └─ appsettings.json # 142B
└─ regular-clr
   ├─ WebApp # Executable, 70.96KB
   ├─ WebApp.deps.json # runtime and hosting information, 388B
   ├─ WebApp.dll # Compiled code of our project, 7KB
   ├─ WebApp.pdb # debugging symbols, 20.5KB
   ├─ WebApp.runtimeconfig.json # runtime information, 600B
   ├─ appsettings.json # 142B
   └─ web.config # IIS configuration, 482B

# On Windows 11
bin
└─ native-aot
   ├─ WebApp.exe # Executable, 8.77MB
   ├─ WebApp.pdb # debugging symbols, 102.83MB
   └─ appsettings.json # 151B

# The regular-clr folder on Windows is omitted here; it contains the same files with roughly the same sizes
```

After setting up the app, we can now measure the things that Microsoft claims Native AOT improves: startup time, memory usage, and application size. Please keep in mind that all these values depend heavily on the actual implementation. They are affected, for example, by the number of objects you allocate, the statements you execute during startup, and the frameworks and libraries you reference. The following charts only reflect our small bare-bones app. Nonetheless, they give us a grasp of the benefits and how they vary across platforms.

Startup times

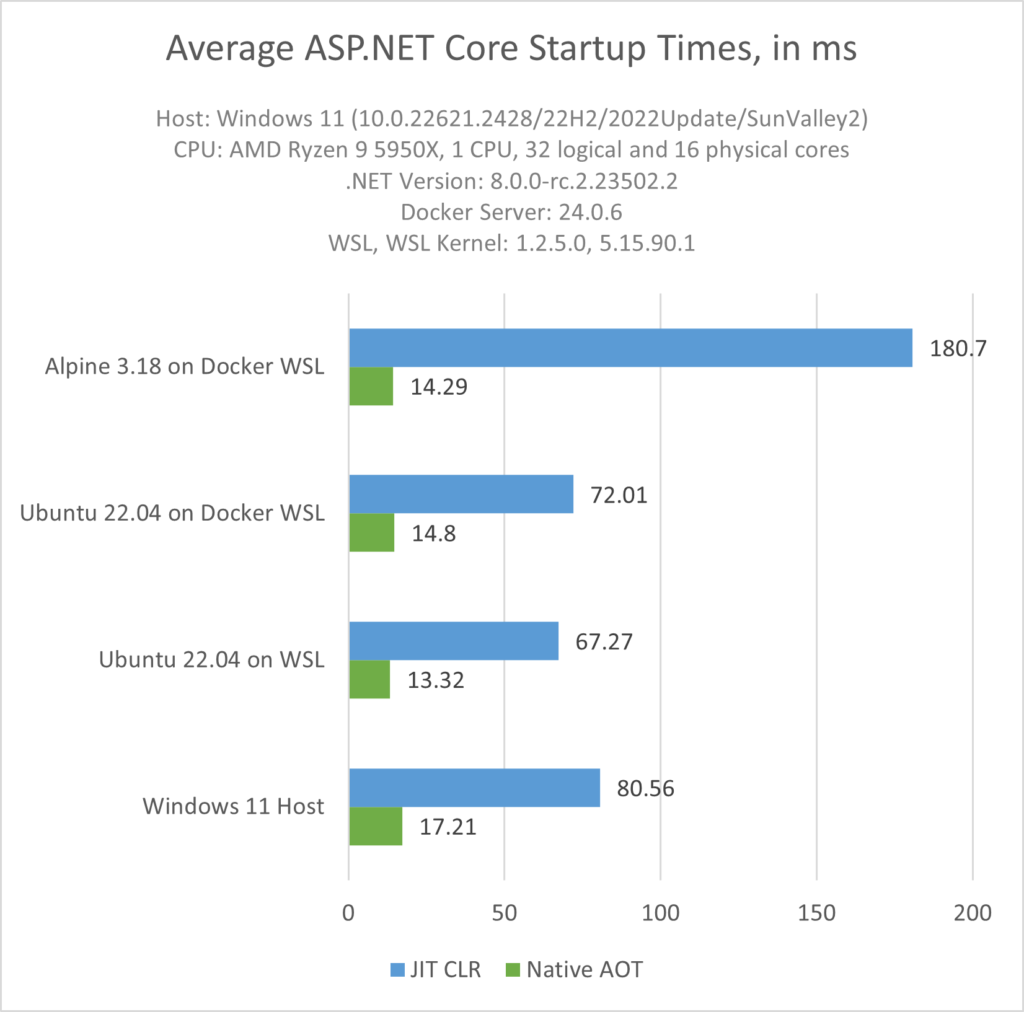

Now, we can execute the apps several times to get a grasp of the startup times. I started each app at least 10 times and then averaged the resulting values. My initial tests were executed on the following targets:

- Ubuntu-22.04-Jammy-based Docker container (running on WSL 2 based Engine)

- Alpine-3.18-based Docker container (running on WSL 2 based Engine)

- Ubuntu 22.04 on WSL 2

- Windows 11
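The post describes the averaging but not the tooling behind it. Below is a minimal sketch of such a harness. It assumes the published executable prints the "Startup took <value>ms" line from Program.cs and then exits on its own. AverageStartupMs and ParseStartupMs are hypothetical helper names. Note that it averages the value reported by the app itself. That value starts counting at the first statement of Program.cs, so any work the runtime does before that statement is not included.

```csharp
using System;
using System.Diagnostics;
using System.Globalization;
using System.Linq;

// Hypothetical usage: dotnet run -- ./bin/native-aot/WebApp 10
if (args.Length >= 2)
{
    double average = AverageStartupMs(args[0], int.Parse(args[1]));
    Console.WriteLine($"Average startup over {args[1]} runs: {average:N3}ms");
}

// Starts the published app `runs` times and averages the startup time it reports.
double AverageStartupMs(string executablePath, int runs)
{
    var samples = new double[runs];
    for (int i = 0; i < runs; i++)
    {
        var startInfo = new ProcessStartInfo(executablePath)
        {
            RedirectStandardOutput = true,
            UseShellExecute = false,
        };
        using Process process = Process.Start(startInfo)
            ?? throw new InvalidOperationException($"Could not start {executablePath}");

        // The app calls StopAsync after measuring, so reading to the end terminates.
        string output = process.StandardOutput.ReadToEnd();
        process.WaitForExit();

        string startupLine = output.Split('\n')
            .First(l => l.StartsWith("Startup took", StringComparison.Ordinal));
        samples[i] = ParseStartupMs(startupLine);
    }
    return samples.Average();
}

// Extracts the number from a line such as "Startup took 14.123ms".
// The app uses InvariantGlobalization, so "N3" output uses '.' and ',' as in the invariant culture.
double ParseStartupMs(string line)
{
    const string Prefix = "Startup took ";
    const string Suffix = "ms";
    string trimmed = line.Trim(); // also removes a trailing '\r' on Windows
    string number = trimmed[Prefix.Length..^Suffix.Length];
    return double.Parse(number, NumberStyles.Number, CultureInfo.InvariantCulture);
}
```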

As we can see, the startup times for Native AOT are around 14ms on Linux and roughly 17ms on Windows 11. For the regular CLR deployment, the times are about 70ms on Ubuntu and 80ms on Windows. There is a massive outlier of ~180ms for the regular CLR build in the Alpine-3.18-based Docker container. So I re-ran an equivalent test on a Raspberry Pi 4, which also gives us a grasp of the startup times on a less powerful machine:

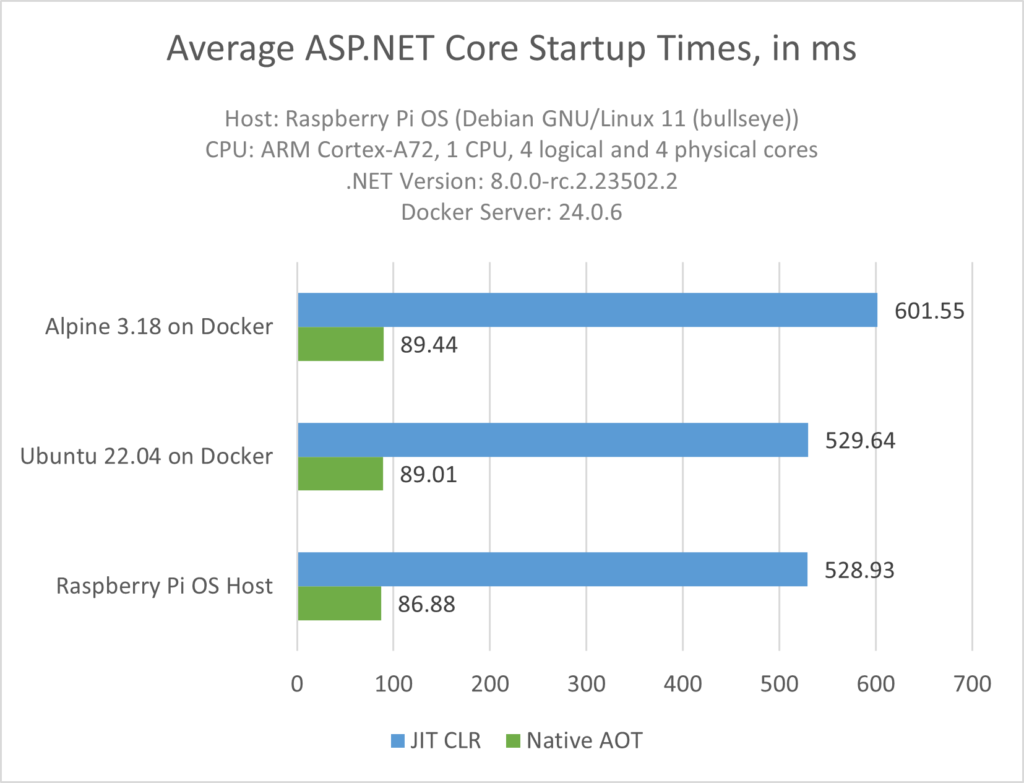

On the ARM Cortex-A72 chip of the Raspberry Pi 4, Native AOT startup time is just short of 90ms. The startup times for the regular CLR build are at around 530ms. We still have an outlier with the regular CLR build running in an Alpine 3.18 Docker container at around 600ms (I also checked this on a dedicated Ubuntu 22.04 VM in Azure and got the same results as on Windows).

Overall, the results indicate that Native AOT starts up about four to six times faster than the regular CLR with its JIT compiler (not counting the Alpine outlier).
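The "four to six times" figure comes from dividing the regular CLR average by the Native AOT average. Here is a small sketch using the approximate values quoted above. They are read off the charts and rounded, so treat the ratios as rough.

```csharp
using System;

// Approximate average startup times quoted in the text, in milliseconds.
var startupTimes = new (string Target, double NativeAotMs, double RegularClrMs)[]
{
    ("Linux x64", 14, 70),
    ("Windows 11 x64", 17, 80),
    ("Raspberry Pi 4", 90, 530),
};

foreach (var (target, nativeAotMs, regularClrMs) in startupTimes)
{
    // Speedup: how many times longer the JIT-based startup takes.
    double speedup = regularClrMs / nativeAotMs;
    Console.WriteLine($"{target}: Native AOT starts {speedup:F1}x faster");
}
```

This yields roughly 5.0, 4.7 and 5.9, which is the four-to-six range.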

Memory Usage

Let us take a look at the memory consumption of our ASP.NET Core app:

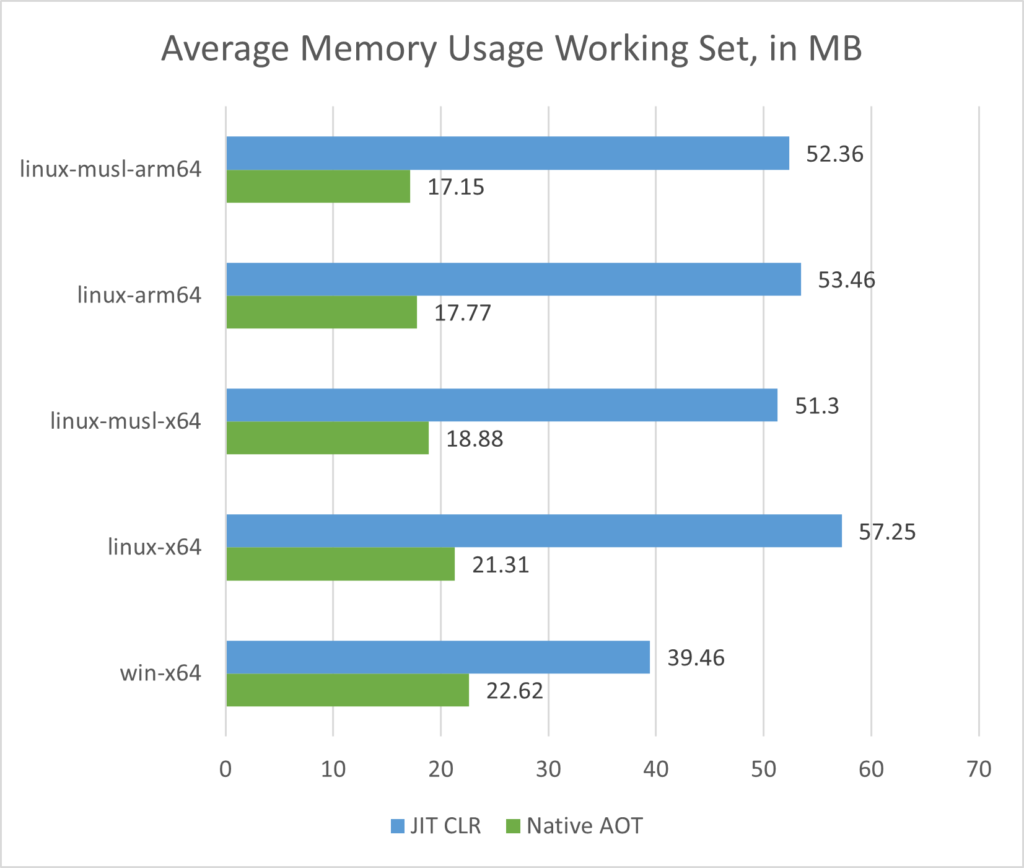

As we can see, the results differ a little bit more depending on the underlying architecture. For Native AOT, the ARM-based apps need the least amount of memory at around 17MB to 18MB, while on x64 based Linux, the working set ranges from roughly 19MB to 21MB. Windows x64 has the largest working set just short of 23MB.

Compared to the regular CLR builds, these are still great improvements. On Linux, the regular CLR builds take more than 50MB of memory, so Native AOT reduces the working set by more than 50%. On Windows, the regular CLR build uses slightly less than 40MB, so the gain is smaller (roughly 40%). Keep in mind that these are only the numbers directly after starting Kestrel (the HTTP server of ASP.NET Core). They will differ greatly depending on the allocations you perform during program execution (the .NET garbage collector is not changed for Native AOT).
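To make the percentages above concrete, here is a small sketch computing the relative working-set reduction. It uses the upper end of each Native AOT range quoted in the text. Because the Linux CLR value is a lower bound ("more than 50MB"), the Linux result is a lower bound as well.

```csharp
using System;

// Relative reduction of the working set when switching from the regular CLR build to Native AOT.
double ReductionPercent(double nativeAotMb, double regularClrMb) =>
    (1 - nativeAotMb / regularClrMb) * 100;

// Approximate values from the text, in MB.
double linux = ReductionPercent(nativeAotMb: 21, regularClrMb: 50);   // regular CLR: "more than 50MB"
double windows = ReductionPercent(nativeAotMb: 23, regularClrMb: 40); // regular CLR: "slightly less than 40MB"

Console.WriteLine($"Linux x64: at least {linux:F0}% less memory");
Console.WriteLine($"Windows x64: roughly {windows:F0}% less memory");
```

This gives about 58% on Linux and a little over 40% on Windows.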

Application Size

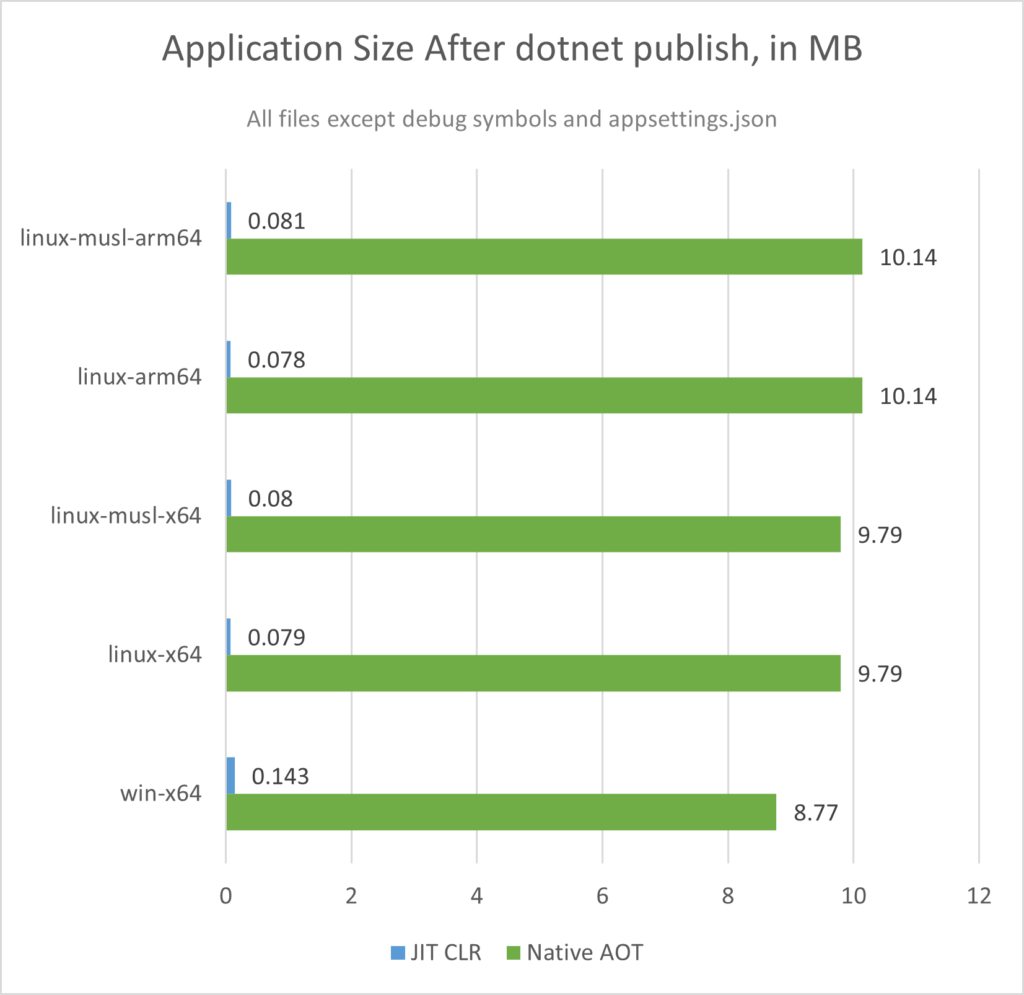

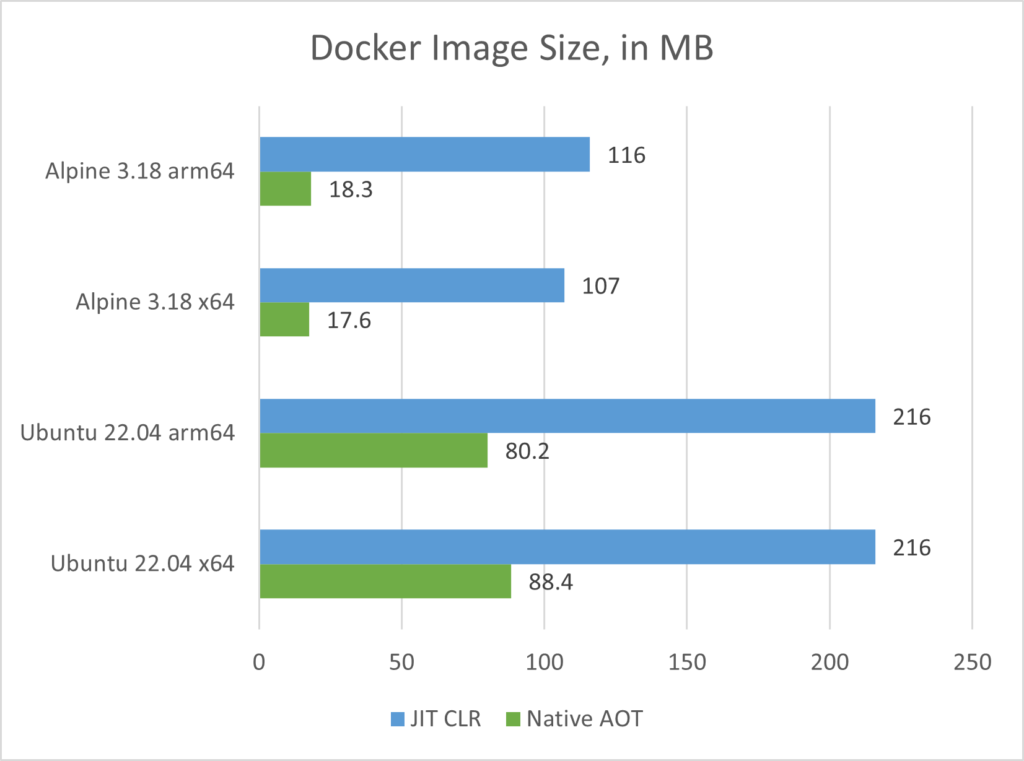

And what about application size? How large are the apps after calling dotnet publish?

Only compiling to MSIL results in very small binary sizes as we can see in the picture above. The regular CLR deployments are only around 82kB in size on Linux and 145kB on Windows. In contrast, Native AOT requires around ~10MB on Linux and ~9MB on Windows. However, Native AOT comes completely packed with all the required runtime components, so when we add the actual dependencies to run our app, the situation changes drastically. Let us take a look at the Docker image sizes for that:

In the diagram above, we see that especially the Alpine-based Docker images are really small when using Native AOT. Around 18MB is a phenomenal value for images that contain our barebone ASP.NET Core app, which is around 15% of the image size with the regular CLR build. Similar things can be said about Ubuntu-based images: they range between 80MB to 90MB, which is less than 40% of the regular 216MB image size. All this reduces payloads for deployments, especially in Cloud-Native scenarios.
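The image-size percentages can be checked with a few lines of arithmetic. The sketch below uses only the approximate sizes quoted above. It also works backwards from "18MB is about 15%" to an implied regular Alpine image size. That implied value is a derived estimate, not a measured one.

```csharp
using System;

// Share of a reference size, in percent.
double PercentOf(double partMb, double wholeMb) => partMb / wholeMb * 100;

// Ubuntu-based Native AOT images (80MB to 90MB) vs. the regular 216MB image.
double smallest = PercentOf(80, 216);
double largest = PercentOf(90, 216);
Console.WriteLine($"Ubuntu-based Native AOT images: {smallest:F0}% to {largest:F0}% of the regular image");

// Derived estimate: if ~18MB is ~15% of the regular Alpine image, that image is roughly 18 / 0.15 MB.
double impliedRegularAlpineMb = 18 / 0.15;
Console.WriteLine($"Implied regular Alpine image size: ~{impliedRegularAlpineMb:F0}MB");
```

This gives about 37% to 42% for the Ubuntu-based images. It also implies a regular Alpine image of roughly 120MB, which is only as precise as the rounded 15%.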

Dockerfile for Native AOT

You might be wondering how to build the aforementioned Alpine-based Docker image for Native AOT yourself. Let us take a look:

```dockerfile
FROM alpine:3.18 AS prepare
WORKDIR /app
RUN adduser -u 1000 --disabled-password --gecos "" appuser && chown -R appuser /app
USER appuser

FROM mcr.microsoft.com/dotnet/sdk:8.0.100-rc.2-alpine3.18 AS build
RUN apk update && apk upgrade
RUN apk add --no-cache clang build-base zlib-dev
WORKDIR /code
COPY ./WebApp.csproj .
ARG RUNTIME_ID=linux-musl-x64
RUN dotnet restore -r $RUNTIME_ID
COPY . .
RUN dotnet publish \
    -c Release \
    -r $RUNTIME_ID \
    -o /app \
    --no-restore

FROM prepare AS final
COPY --chown=appuser --from=build /app/WebApp ./WebApp
ENTRYPOINT ["./WebApp"]
```

In the first “prepare” stage, we start from a basic Alpine 3.18 image, which uses the root user by default. We want to avoid running our web app with elevated privileges. So we first set /app as the working directory (WORKDIR creates it), which is where the compiled web app will be copied to. Then we run the adduser command to create a standard user with fewer privileges. We use the following arguments:

- -u 1000 sets the ID of the new user

- --disabled-password does not assign a password to the new user, so adduser does not prompt for one

- --gecos "" sets the general account information (the GECOS field, e.g. “real name” or “phone number”). As we only pass an empty string, these fields stay empty and adduser does not ask for them interactively during user creation

We then immediately hand over ownership of the /app folder to this new user with chown -R appuser /app and switch to it with USER appuser.

Then comes the important “build” stage, where we publish the app with Native AOT. We start from the mcr.microsoft.com/dotnet/sdk:8.0.100-rc.2-alpine3.18 image. Once .NET 8 is officially released, you can most likely use the mcr.microsoft.com/dotnet/sdk:8.0-alpine3.18 image; see the Microsoft Artifact Registry for details. We refresh the package index of Alpine’s APK package manager and upgrade the installed packages with apk update && apk upgrade. Then we install clang, build-base, and zlib-dev. These packages are required for Native AOT builds on Alpine; you can find information about the build prerequisites for Native AOT in this Microsoft Learn article.

Finally, we restore NuGet packages and publish the app in separate layers. That way, the restore layer can be cached and only has to run again when the csproj file changes, which reduces the time it takes to rebuild the image. We copy over just the csproj file and call dotnet restore on it, passing in the Runtime Identifier via the $RUNTIME_ID build argument. By default, it is set to linux-musl-x64, but you can change it to e.g. linux-musl-arm64 to build for ARM64-based systems. Keep in mind that Native AOT then has to produce ARM64 code. On Linux, this usually means running the build on an ARM64 machine or setting up a cross-compilation toolchain. Afterwards, we copy over the rest of the source files and call dotnet publish to build and publish the whole app in Release mode in one Dockerfile layer. Remember that PublishAot is turned on in our csproj file.

The published Native AOT app then resides in the top-level /app folder. We can simply copy it from the build stage to the final stage with the COPY --chown=appuser --from=build /app/WebApp ./WebApp instruction, which also transfers ownership to appuser in one go. Please note that we only copy the single executable. We copy neither the .dbg file nor any appsettings.json files; configure the app using environment variables instead. Furthermore, our Native AOT deployment has no further runtime dependencies. The app runs on a plain vanilla Alpine 3.18 image: just copy and execute the binary and you are good to go.

The Drawbacks

So, we’ve seen a very notable decrease in startup time, memory usage, and Docker image size. We no longer need to package any runtime dependencies. Should we all switch to Native AOT now? Probably not, because you will give up a lot of features you might know and love from the .NET ecosystem.

The most important limitation is that you cannot use unbound reflection. Native AOT apps are trimmed during publish. A static analysis walks through the types and members of all assemblies, determines which of them are actually reachable, and removes the rest. This is necessary because native code takes up far more space than MSIL. The only way to get to a reasonable binary size is to remove unused code during native code generation.
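Reflection is "unbound" when the trimmer cannot see statically which types or members will be accessed. The following sketch contrasts two cases. In the first, a type is looked up by a name that is only known at run time. In the second, a generic helper's type parameter carries the DynamicallyAccessedMembers annotation from System.Diagnostics.CodeAnalysis. That annotation tells the trimmer to keep the public properties of every type passed in. PropertyNames is a hypothetical helper. The trim analyzer is expected to warn about the Type.GetType call when the project is analyzed for trimming or Native AOT.

```csharp
using System;
using System.Diagnostics.CodeAnalysis;

// Unbound: the type name only exists at run time (here: an optional command-line argument),
// so the trimmer cannot know which type to keep. The trim analyzer warns about this call,
// and in a trimmed app the lookup may simply return null.
string typeName = args.Length > 0 ? args[0] : "System.Version";
Type? unboundType = Type.GetType(typeName);
Console.WriteLine($"Type.GetType(\"{typeName}\") returned {unboundType?.FullName ?? "null"}");

// Bound: the annotation tells the trimmer to preserve the public properties of
// whatever type T is at each call site, so reading them via reflection stays safe.
string[] PropertyNames<[DynamicallyAccessedMembers(DynamicallyAccessedMemberTypes.PublicProperties)] T>() =>
    Array.ConvertAll(typeof(T).GetProperties(), property => property.Name);

Console.WriteLine(string.Join(", ", PropertyNames<Version>()));
```

The annotation only helps when the type is known at the call site. When a type name arrives as a string, there is nothing the trimmer can keep ahead of time.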

Unfortunately, this comes with caveats, some of them being:

- No use of unbound reflection, for example reflection-based deserialization of DTOs

- No run-time code generation (e.g. via System.Reflection.Emit)

- No dynamic loading of assemblies (i.e. no support for plugin-style applications)

- Serializers like Newtonsoft.Json that rely on reflection during serialization and deserialization cannot be used. JSON serialization of DTOs only works with System.Text.Json in combination with its Roslyn source generator. The same applies to binding configuration values from IConfiguration: Microsoft introduced a dedicated source generator that emits the binding code to avoid unbound reflection.

- Similarly, object/relational mappers (ORMs) like Entity Framework Core and Dapper, which use unbound reflection to instantiate entities and populate their properties, are not compatible with Native AOT either. Both plan to add support for source generators, but this will not be ready when .NET 8 is released.

- No support for ASP.NET Core MVC and SignalR: only Minimal APIs and gRPC can be used to create endpoints and stream data. A Minimal API source generator (the Request Delegate Generator) handles endpoint registration with Map* methods such as MapGet.

- No direct support for automated testing of native code: none of the unit test frameworks like xUnit and NUnit can run in Native AOT mode. For now, the .NET team focuses on an analyzer approach. When you build your app and run tests, Roslyn analyzers emit warnings that hint at potential problems when running in Native AOT mode. Please note that your app runs in regular CLR mode when you use dotnet run, dotnet test, or similar functionality from your IDE. Native AOT only takes effect when your app is published.

- Limited debugging of native code: after publishing, your app is a true native binary, and thus the managed debugger for C# will not work with it. We need to use debuggers like gdb or WinDbg after deployment. You also require the debugging symbols (.dbg or .pdb files) for a useful debugging experience.
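The System.Text.Json source generator mentioned in the list above replaces reflection with serialization metadata generated at compile time. Below is a minimal sketch with a hypothetical WeatherForecastDto and a hypothetical JsonDemo helper. Every type you want to serialize or deserialize is listed on a partial JsonSerializerContext. The generated JsonTypeInfo is then passed to JsonSerializer explicitly.

```csharp
using System.Text.Json;
using System.Text.Json.Serialization;

// Hypothetical DTO used for illustration.
public sealed class WeatherForecastDto
{
    public string City { get; set; } = "";
    public int TemperatureC { get; set; }
}

// The source generator emits serialization metadata for every type listed here at
// compile time, including the AppJsonSerializerContext.Default.WeatherForecastDto property.
[JsonSerializable(typeof(WeatherForecastDto))]
internal partial class AppJsonSerializerContext : JsonSerializerContext
{
}

public static class JsonDemo
{
    // Passing the generated JsonTypeInfo avoids any reflection-based fallback.
    public static string Serialize(WeatherForecastDto dto) =>
        JsonSerializer.Serialize(dto, AppJsonSerializerContext.Default.WeatherForecastDto);

    public static WeatherForecastDto? Deserialize(string json) =>
        JsonSerializer.Deserialize(json, AppJsonSerializerContext.Default.WeatherForecastDto);
}
```

In an ASP.NET Core .NET 8 app, you would make the same context available to Minimal API endpoints by inserting it into the HTTP JSON options. For example: builder.Services.ConfigureHttpJsonOptions(options => options.SerializerOptions.TypeInfoResolverChain.Insert(0, AppJsonSerializerContext.Default));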

Conclusion

Native AOT is an interesting development for ASP.NET Core, and it will probably gain more traction in upcoming releases like .NET 9 and 10. Its main use case is cloud-native scenarios where you prefer microservices with a smaller image size, a smaller memory footprint, and faster startup. The same is true for serverless functions that need to start up quickly; in these scenarios, Native AOT can shine. When running in regular CLR mode, the JIT needs to compile a lot of code at startup, the first time each method is called. Native AOT does this work entirely at build time. But do not forget: code generated by Native AOT is not necessarily faster. In fact, the tiered JIT compilation process can take the target system into account, because it runs right next to your code on that machine. It can optimize for the actual CPU and, with Dynamic PGO, for the observed runtime behavior. Native code created at compile time cannot take these into account.

Libraries and frameworks still need to catch up with Native AOT support. Code that currently relies on unbound reflection must be replaced by code generated at compile time, most likely via source generators. It will be interesting to see whether developers adopt this deployment model once more third-party frameworks and libraries support it; Entity Framework Core will be especially important here. It will also be interesting to see how much further Native AOT can be tuned in the coming years to compete with the likes of Go and Rust.