In this article, we will take a look at:

- Implementing authentication (AuthN) and authorization (AuthZ) for Azure Kubernetes Service (AKS) with Azure Active Directory (AzureAD)

- Implementing custom network policies to restrict in-cluster communication

Although the topics in this article are explained in the context of Microsoft Azure, the concepts can be transferred to other environments that provide managed Kubernetes clusters, such as AWS or GCP.

Article Series

- Part 1: Code, Container, Cluster, Cloud: The 4C’s of Cloud-Native Security

- Part 2: Container Security: The 4C’s of Cloud-Native Security

- Part 3: Cluster Security: The 4C’s of Cloud-Native Security ⬅

- Part 4: Code Security: The 4C’s of Cloud-Native Security

- Part 5: Cloud Security: The 4C’s of Cloud-Native Security

Implementing AuthN and AuthZ for AKS with AzureAD

When using AKS, Azure’s managed Kubernetes offering, you can configure seamless integration with AzureAD to authenticate users based on OAuth 2.0 and OpenID Connect. On top of authentication, the AKS and AzureAD integration allows you to configure authorization based on role-based access control (RBAC).

Both AzureAD based AuthN and AuthZ can be configured when creating new AKS clusters or enabled later on existing clusters.

Configure AzureAD based AuthN for AKS

To enable AzureAD based AuthN, you need an AzureAD group that contains all users who should become Kubernetes cluster administrators:

```bash
AKS_NAME=aks-sample
AKS_RG_NAME=rg-aks-sample
GROUP_NAME=k8s-admins

# create an AAD group for k8s admins
# (Microsoft Graph based Azure CLI versions return the object ID as "id")
groupId=$(az ad group create --display-name "$GROUP_NAME" \
  --description "AKS Administrators" \
  --mail-nickname "AKS-Administrators" \
  --query "id" -o tsv)

# add all users of the Development department to the k8s admins group
az ad user list --filter "department eq 'Development'" --query "[].id" -o tsv \
  | xargs -I _ az ad group member add --group "$groupId" --member-id _

# enable AAD AuthN for existing AKS cluster
az aks update -n "$AKS_NAME" -g "$AKS_RG_NAME" \
  --enable-aad \
  --aad-admin-group-object-ids "$groupId"
```

Once AzureAD authentication has been enabled, you can download the cluster credentials for the user currently signed in to the Azure CLI (az) using the following command:

```bash
AKS_NAME=aks-sample
AKS_RG_NAME=rg-aks-sample

# get AKS credentials for the current user
az aks get-credentials -n "$AKS_NAME" -g "$AKS_RG_NAME"
```

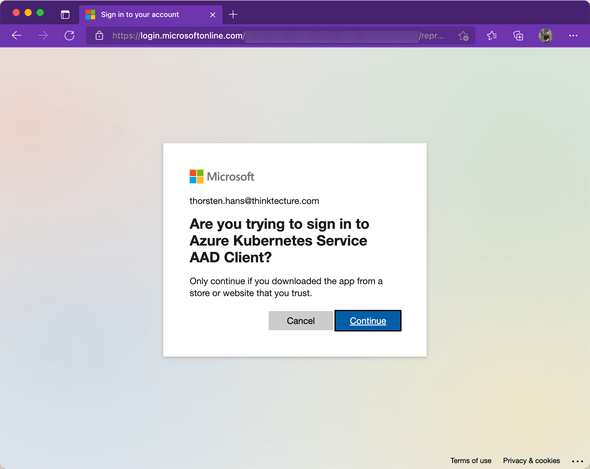

The az aks get-credentials command will also switch the context of your local kubectl installation to point to that particular AKS instance. You just have to browse through the cluster using kubectl to challenge the authentication. For example, ask for the list of namespaces (kubectl get ns). Once you’ve invoked the command, AzureAD integration will invoke the authentication flow (device code flow). Follow the instructions shown on your terminal, authenticate, and consent using a web browser as shown here:

Once you have authenticated kubectl with AzureAD to access the AKS cluster on your behalf, the kubectl get ns command prints the cluster’s namespaces to the terminal. If you don’t have an existing AKS cluster, you can create a new one with AzureAD integration enabled from the start, using the following commands:

```bash
AKS_NAME=aks-sample
AKS_RG_NAME=rg-aks-sample
GROUP_NAME=k8s-admins

# create an AAD group for k8s admins
groupId=$(az ad group create --display-name "$GROUP_NAME" \
  --description "AKS Administrators" \
  --mail-nickname "AKS-Administrators" \
  --query "id" -o tsv)

# add all users of the Development department to the k8s admins group
az ad user list --filter "department eq 'Development'" --query "[].id" -o tsv \
  | xargs -I _ az ad group member add --group "$groupId" --member-id _

# create a new AKS cluster with AAD integration enabled
az aks create -n "$AKS_NAME" -g "$AKS_RG_NAME" \
  --enable-aad \
  --aad-admin-group-object-ids "$groupId"
```

Having authentication in place, we can move on and take care of tailored authorization.

Configure AzureAD based AuthZ for AKS

AzureAD based authorization for AKS allows you to “outsource” your authorization definition to AzureAD instead of managing it directly in Kubernetes. Microsoft provides four built-in roles for AKS authorization:

- Azure Kubernetes Service RBAC Reader: Allows read-only access to see most objects in a namespace. It doesn’t allow viewing roles or role bindings. This role doesn’t allow viewing Secrets since reading the contents of Secrets enables access to ServiceAccount credentials in the namespace, which would allow API access as any ServiceAccount in the namespace (a form of privilege escalation).

- Azure Kubernetes Service RBAC Writer: Allows read/write access to most objects in a namespace. This role doesn’t allow viewing or modifying roles or role bindings. However, this role allows accessing Secrets and running Pods as any ServiceAccount in the namespace, so it can be used to gain the API access levels of any ServiceAccount in the namespace.

- Azure Kubernetes Service RBAC Admin: Allows admin access, intended to be granted within a namespace. Allows read/write access to most resources in a namespace (or cluster scope), including the ability to create roles and role bindings within the namespace. This role doesn’t allow write access to resource quota or to the namespace itself.

- Azure Kubernetes Service RBAC Cluster Admin: Allows super-user access to perform any action on any resource. It gives full control over every resource in the cluster and in all namespaces.

AzureAD AuthZ can be enabled for both new and existing AKS clusters. Again, let’s start by enabling AzureAD based AuthZ on an existing AKS cluster:

```bash
AKS_NAME=aks-sample
AKS_RG_NAME=rg-aks-sample

# enable AAD AuthZ
az aks update -n "$AKS_NAME" -g "$AKS_RG_NAME" \
  --enable-azure-rbac

# query AKS ID
aksId=$(az aks show -n "$AKS_NAME" -g "$AKS_RG_NAME" --query "id" -o tsv)

# get the AAD object ID of the currently authenticated user
aadUserId=$(az ad signed-in-user show --query id -o tsv)

# create a role assignment for the currently authenticated user
az role assignment create --role "Azure Kubernetes Service RBAC Reader" \
  --assignee "$aadUserId" \
  --scope "$aksId"
```
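To see the effect of the Reader assignment, you can ask the API server what the signed-in identity is allowed to do using kubectl auth can-i. The following is a minimal sketch wrapped in a hypothetical helper function, check_reader_access, whose namespace argument defaults to default. With only the Reader role, you would expect yes for listing pods and no for reading Secrets or creating ConfigMaps. It assumes your user is not also a member of the admin group configured earlier (members of that group get cluster-admin access) and that the role assignment has had a few minutes to propagate.

```shell
# Hypothetical helper: ask the API server which of a few sample actions the
# signed-in identity may perform in the given namespace (default: "default").
# Each output line has the form "<verb> <resource>: <answer>".
check_reader_access() {
  local ns="${1:-default}"
  local check verb resource
  for check in "list pods" "get secrets" "create configmaps"; do
    verb=${check% *}      # text before the space, e.g. "list"
    resource=${check#* }  # text after the space, e.g. "pods"
    # kubectl auth can-i answers with yes or no; its exit code is ignored here
    printf '%s: %s\n' "$check" "$(kubectl auth can-i "$verb" "$resource" -n "$ns")"
  done
}

# Usage: check_reader_access dev
```

Each line of the output pairs the checked action with the API server’s answer, so a single call gives a quick overview of what the Reader role permits.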

To enable AzureAD AuthZ while creating a new AKS cluster, pass the --enable-azure-rbac flag together with --enable-aad (Azure RBAC for Kubernetes authorization requires AzureAD integration), as shown here:

```bash
AKS_NAME=aks-sample
AKS_RG_NAME=rg-aks-sample

# create a new AKS cluster with AAD AuthN and AuthZ
az aks create -n "$AKS_NAME" -g "$AKS_RG_NAME" \
  --enable-aad \
  --enable-azure-rbac
```

Custom role definitions for AzureAD based AuthZ

On top of the four built-in roles, you can create your own role definitions to configure fine-grained authorization. The following example creates a custom role definition that authorizes users to read and write ConfigMaps.

Take the following snippet, replace <SubscriptionId> with the output of the az account show --query id -o tsv command, and store it in a local file called config-map-contrib.json:

```json
{
  "Name": "AKS ConfigMap Contributor",
  "Description": "Lets you read/write all configmaps in cluster/namespace.",
  "Actions": [],
  "NotActions": [],
  "DataActions": [
    "Microsoft.ContainerService/managedClusters/configmaps/read",
    "Microsoft.ContainerService/managedClusters/configmaps/write"
  ],
  "NotDataActions": [],
  "assignableScopes": [
    "/subscriptions/<SubscriptionId>"
  ]
}
```
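If you prefer not to edit the file by hand, a small shell helper can render it with the subscription ID already filled in. This is a sketch: write_configmap_role is a hypothetical name, and it writes the same role definition as the JSON above, taking the subscription ID as its first argument and an optional output file name as its second.

```shell
# Hypothetical helper: write the "AKS ConfigMap Contributor" role definition
# with <SubscriptionId> replaced by the subscription ID passed as $1.
# $2 is the output file (default: config-map-contrib.json).
write_configmap_role() {
  local subscription_id="$1"
  local out="${2:-config-map-contrib.json}"
  if [ -z "$subscription_id" ]; then
    echo "usage: write_configmap_role <subscription-id> [output-file]" >&2
    return 1
  fi
  # unquoted EOF: ${subscription_id} is expanded inside the heredoc
  cat > "$out" <<EOF
{
  "Name": "AKS ConfigMap Contributor",
  "Description": "Lets you read/write all configmaps in cluster/namespace.",
  "Actions": [],
  "NotActions": [],
  "DataActions": [
    "Microsoft.ContainerService/managedClusters/configmaps/read",
    "Microsoft.ContainerService/managedClusters/configmaps/write"
  ],
  "NotDataActions": [],
  "assignableScopes": [
    "/subscriptions/${subscription_id}"
  ]
}
EOF
}

# Usage: write_configmap_role "$(az account show --query id -o tsv)"
```

Called as in the usage comment, it creates config-map-contrib.json in the current directory, ready for az role definition create.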

With the customized config-map-contrib.json in place, create the role definition:

```bash
# create the custom role definition
az role definition create --role-definition @config-map-contrib.json
```

Finally, assign the custom role definition to a particular user:

```bash
AKS_NAME=aks-sample
AKS_RG_NAME=rg-aks-sample

# get the AAD object ID of the currently authenticated user
aadUserId=$(az ad signed-in-user show --query id -o tsv)

# get the AKS resource ID
aksId=$(az aks show -n "$AKS_NAME" -g "$AKS_RG_NAME" --query id -o tsv)

# assign the custom role definition to the user
az role assignment create --role "AKS ConfigMap Contributor" \
  --assignee "$aadUserId" \
  --scope "$aksId"
```
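Role assignments don’t have to cover the entire cluster. Azure RBAC for AKS also accepts a single namespace as scope, by appending /namespaces/<namespace-name> to the cluster’s resource ID. The sketch below wraps that in a hypothetical helper, assign_namespace_role, so that the custom role only grants ConfigMap access inside one namespace (dev in the usage comment, matching the namespace used in the network policy examples of this article).

```shell
# Hypothetical helper: assign an Azure role to a principal for a single
# Kubernetes namespace by appending /namespaces/<name> to the AKS resource ID.
# Arguments: <role> <assignee-object-id> <aks-resource-id> <namespace>
assign_namespace_role() {
  if [ "$#" -ne 4 ]; then
    echo "usage: assign_namespace_role <role> <assignee> <aks-id> <namespace>" >&2
    return 1
  fi
  az role assignment create --role "$1" \
    --assignee "$2" \
    --scope "$3/namespaces/$4"
}

# Usage (reusing the variables from the previous snippet):
# assign_namespace_role "AKS ConfigMap Contributor" "$aadUserId" "$aksId" dev
```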

Implement custom network policies to restrict in-cluster communication

By default, there are no network communication restrictions inside a Kubernetes cluster: pods can communicate with each other, whether they run in the same namespace or in different namespaces. Although this open default makes it easy for cloud-native applications and their components to talk to each other, you should consider implementing custom network policies to control and limit network connectivity between those components and enforce the principle of least privilege.
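A common first step is a default-deny policy per namespace, which reverses that default: once the cluster runs a network policy engine, pods in the namespace accept no inbound traffic unless another NetworkPolicy allows it. The following sketch prints such a manifest from a hypothetical shell function, default_deny_ingress, so it can be piped into kubectl apply; the policy name default-deny-ingress is an assumption.

```shell
# Hypothetical helper: print a default-deny ingress NetworkPolicy for the
# namespace given as $1. The empty podSelector selects every pod in that
# namespace; listing Ingress in policyTypes without any ingress rules
# blocks all inbound traffic that no other policy allows.
default_deny_ingress() {
  if [ -z "$1" ]; then
    echo "usage: default_deny_ingress <namespace>" >&2
    return 1
  fi
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
  namespace: $1
spec:
  podSelector: {}
  policyTypes:
    - Ingress
EOF
}

# Usage: default_deny_ingress dev | kubectl apply -f -
```

Because NetworkPolicies are additive, allow rules such as the BackendA policy in this section still take effect on top of the default-deny policy.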

By providing custom NetworkPolicy configurations in Kubernetes, you can control ingress and egress communication for any pod in your cluster. When creating an AKS cluster, you have to choose a network policy option, which can’t be changed once the cluster has been created. At the time of writing, Azure offers two options: Azure Network Policies and Calico Network Policies.

Although both options fully support the Kubernetes NetworkPolicy specification, you must understand the limitations and differences between them. Review the capability comparison of both options in the AKS documentation before locking in your decision.
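The policy option is set with the --network-policy flag of az aks create. The sketch below wraps it in a hypothetical helper, create_aks_with_network_policy, that only accepts the two options discussed here. It pairs them with the Azure CNI network plugin (--network-plugin azure), because Azure Network Policies require Azure CNI, whereas Calico also works with kubenet. Combine it with the AzureAD flags from the previous sections as needed.

```shell
# Hypothetical helper: create an AKS cluster with one of the two network
# policy options discussed here; the option is chosen at creation time.
# Arguments: <cluster-name> <resource-group> <azure|calico>
create_aks_with_network_policy() {
  case "$3" in
    azure|calico) ;;
    *)
      echo "network policy must be 'azure' or 'calico'" >&2
      return 1
      ;;
  esac
  # Azure Network Policies require the Azure CNI plugin; Calico works with it too
  az aks create -n "$1" -g "$2" \
    --network-plugin azure \
    --network-policy "$3"
}

# Usage: create_aks_with_network_policy aks-sample rg-aks-sample calico
```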

Once you’ve chosen the network policy option and created your cluster, you can start implementing network policies to restrict communication channels in your cluster. For demonstration purposes, we’ll create a NetworkPolicy that allows inbound connections to BackendA only from Pods in the same namespace (dev) that carry the role: frontend label:

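```yaml
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: backend-a-ingress
  namespace: dev
spec:
  # the policy applies to the BackendA pods
  podSelector:
    matchLabels:
      app: sample-app
      role: backend-a
  ingress:
    - from:
        # namespaceSelector and podSelector in the same entry are combined
        # (AND): only frontend pods from the dev namespace are allowed
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: dev
          podSelector:
            matchLabels:
              app: sample-app
              role: frontend
```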
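To check the policy, you can start a short-lived pod with the relevant labels and try to reach BackendA from it. The sketch below assumes BackendA is exposed through a Service named backend-a on port 80 in the dev namespace; that Service, the busybox:1.36 image, and the helper name probe_backend_a are assumptions that are not part of the original sample.

```shell
# Hypothetical helper: run a throwaway busybox pod in the dev namespace with
# the labels app=sample-app and role=<role>, then try to fetch
# http://backend-a. Assumes a Service named backend-a on port 80 (not part
# of the original sample). --rm deletes the pod once wget exits.
probe_backend_a() {
  if [ -z "$1" ]; then
    echo "usage: probe_backend_a <role>" >&2
    return 1
  fi
  kubectl run "np-probe-$1" -n dev --rm -i --restart=Never \
    --image=busybox:1.36 \
    --labels="app=sample-app,role=$1" \
    -- wget -q -O- -T 3 http://backend-a
}

# Usage:
#   probe_backend_a frontend    # allowed by backend-a-ingress
#   probe_backend_a untrusted   # should time out
```

A probe with the frontend role should receive a response, while a probe with any other role should time out after roughly three seconds (the wget -T value).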

In addition to controlling inbound (ingress) connectivity, you can also control how outbound (egress) connectivity should behave. For example, let’s prevent Pods in the Namespace dev with the role: docs label from establishing outbound connections:

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: docs-deny-all-egress
  namespace: dev
spec:
  podSelector:
    matchLabels:
      app: sample-app
      role: docs
  policyTypes:
    - Egress
  egress: []
```

When you apply this policy, the Docs component can’t establish any outbound connection. This also means that code running in the corresponding pods can’t resolve hostnames using DNS, because DNS queries are outbound traffic too. If you want to allow DNS while still blocking all other outbound connections, update the egress policy and allow the DNS ports (53/tcp and 53/udp) as shown here:

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: docs-deny-all-egress
  namespace: dev
spec:
  podSelector:
    matchLabels:
      app: sample-app
      role: docs
  policyTypes:
    - Egress
  egress:
    - ports:
        - port: 53
          protocol: TCP
        - port: 53
          protocol: UDP
```
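Because this egress rule has no to section, port 53 is open towards any destination, not only the cluster’s DNS service. A tighter variant restricts the rule to the CoreDNS pods in kube-system. The sketch below assumes those pods carry the label k8s-app: kube-dns, which you can verify with kubectl get pods -n kube-system -l k8s-app=kube-dns; the helper name docs_dns_only_egress is hypothetical.

```shell
# Hypothetical helper: print a stricter version of docs-deny-all-egress that
# only allows DNS traffic to the cluster DNS pods in kube-system.
# Assumes the CoreDNS pods are labeled k8s-app=kube-dns.
docs_dns_only_egress() {
  cat <<'EOF'
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: docs-deny-all-egress
  namespace: dev
spec:
  podSelector:
    matchLabels:
      app: sample-app
      role: docs
  policyTypes:
    - Egress
  egress:
    - to:
        # both selectors in one entry: DNS pods inside kube-system only
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: kube-system
          podSelector:
            matchLabels:
              k8s-app: kube-dns
      ports:
        - port: 53
          protocol: UDP
        - port: 53
          protocol: TCP
EOF
}

# Usage: docs_dns_only_egress | kubectl apply -f -
```

Since it keeps the policy name docs-deny-all-egress, applying it replaces the previous version of the policy instead of adding a second one.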

Recap

This article looked at examples of securing a Kubernetes cluster in Azure. The concepts shown are just a fraction of the security controls and considerations you should have on your list when running production workloads on Kubernetes. You should also consider other measures, such as enforcing tailored policies on your cluster, implementing resource quotas and limit ranges, or restricting access to the host network.

However, implementing AuthN and AuthZ based on AzureAD is an excellent example of how tightly AKS integrates with surrounding Azure cloud services and capabilities. The AKS team is continuously pushing the boundaries and releasing more add-ons for AKS, so we can extend, harden, and integrate our AKS instances with ease.

By adding tailored network policies using the NetworkPolicy specification, you can control in-cluster communication and isolate critical components even within shared cluster environments.